Hey 👋

I’m Oliver Patel, author and creator of Enterprise AI Governance.

This free newsletter delivers practical, actionable, and timely insights for AI governance professionals.

My goal is simple: to empower you to understand, implement, and master AI governance.


A lot has changed in U.S. AI policy in recent months, and it can be hard for AI governance professionals to keep up.

To help navigate these recent shifts, this article provides a comprehensive, up-to-date, and accessible overview of U.S. federal AI law and policy.

It does not cover U.S. state AI laws and initiatives, which will be the focus of next week’s edition of Enterprise AI Governance.

Despite lacking comprehensive EU-style regulation, the U.S. does have several important AI laws. In fact, there have been dozens of federal laws, regulations, and initiatives on AI, many of which will be discussed below.

Aside from President Biden’s (ultimately unsuccessful) attempts to initiate private sector AI regulation, the U.S. government’s core focus in recent years has been on maintaining and promoting U.S. AI leadership, restricting the export of AI-related technologies, and encouraging responsible and innovative federal government use of AI.

The Trump administration, which is primarily concerned with strengthening “U.S. global AI dominance”, is pivoting away from the Biden administration’s AI governance and safety agenda. However, this does not mean that AI governance is completely off the menu. Its importance was stressed in a recent memorandum published by the White House’s Office of Management and Budget, which described effective AI governance as “key to accelerated innovation”.

This overview covers:

✅ U.S. global AI leadership and the race with China

✅ Biden’s AI governance agenda

✅ Trump’s agenda: what ‘America First’ means for AI

✅ AI export controls and investment restrictions

✅ Federal government use and acquisition of AI

✅ NIST and the U.S. AI Safety Institute

✅ What’s coming next?

U.S. global AI leadership and the race with China

The U.S. is the undisputed global AI leader, by nearly every metric. The global AI industry is dominated by U.S. companies, AI models, and hardware. Here are some stats from Stanford’s 2025 AI Index Report, to illustrate the point:

In 2024, U.S. private investment in AI was $109 billion. In contrast, it was $9.3 billion in China and $4.5 billion in the UK.

U.S. organisations produced 40 ‘notable’ AI models in 2024, significantly more than China’s 15 and Europe’s 3.

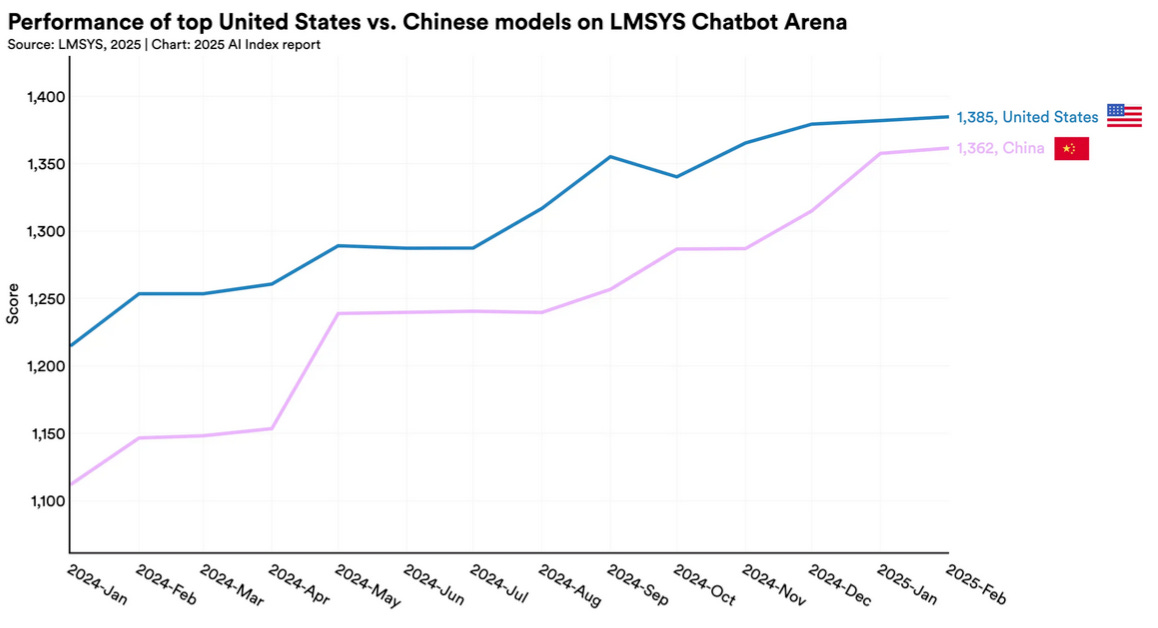

However, in some areas, China is quickly catching up and the U.S. is taking nothing for granted. For example, although U.S. AI model production output is higher, China’s advanced AI models are getting closer to U.S. models in terms of quality (see chart below).

Source: Stanford AI Index Report 2025

Chinese AI company DeepSeek recently demonstrated that it can develop AI models with performance comparable to leading U.S. models at a fraction of the cost. This prompted a sell-off in U.S. tech stocks, with the S&P 500 falling roughly 1.5% in a single day following the release of DeepSeek’s open-source R1 model.

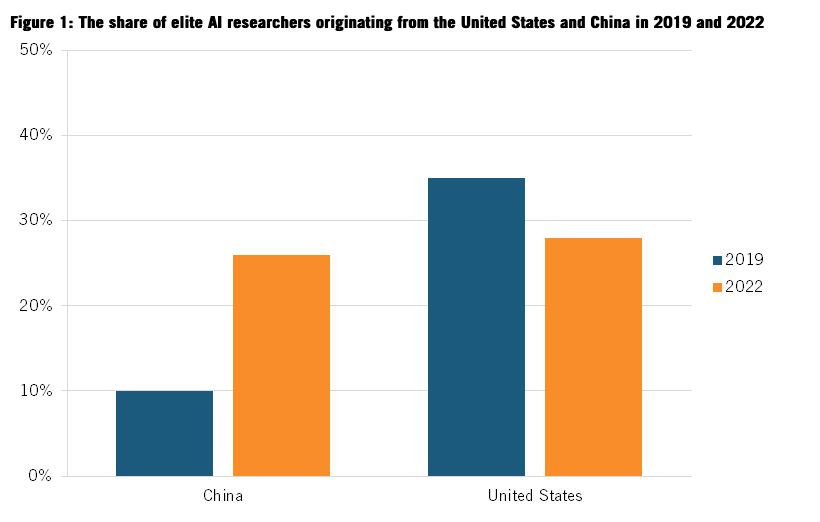

Furthermore, China is charging ahead in AI talent, research, and patent filing. Increasing numbers of “top-tier” AI researchers originate from China (see chart below), with the share originating from the U.S. declining in recent years. Also, 300,510 AI-related patents were filed in China in 2024, compared with 67,773 in the U.S.

Image source: Information Technology & Innovation Foundation, 2025

These trends help explain a core driver of much of the U.S. AI policy agenda in recent years, from AI export controls and investment restrictions to substantial funding for AI infrastructure.

Although there is no comprehensive federal AI law like the EU AI Act, there have been a number of federal laws and initiatives, across the past few administrations, that seek to maintain and strengthen the U.S. position of global leadership.

Some of the most relevant laws include:

Executive Order 14141: Advancing United States Leadership in AI Infrastructure

This Executive Order was signed by President Biden in January 2025. At the time of writing, it has not been revoked by President Trump.

The purpose of this Executive Order is to promote and encourage domestic AI infrastructure development, to “protect U.S. national security” and “advance U.S. economic competitiveness”. This includes using federal sites to build AI data centres and prioritising clean energy to power them.

CHIPS and Science Act of 2022

Enacted in August 2022, the CHIPS and Science Act (‘CHIPS’ stands for ‘Creating Helpful Incentives to Produce Semiconductors’) was a key pillar of Biden’s AI policy.

The headline impact of this Act was to authorise approximately $280 billion in science and technology spending, including roughly $52.7 billion for domestic semiconductor manufacturing and research, the hardware most critical for AI development.

National AI Initiative Act of 2020

This law was enacted in January 2021 as part of the National Defense Authorization Act (NDAA) for Fiscal Year 2021.

It provided over $6 billion in funding for AI R&D, education, and standards development, with the ultimate goal of strengthening U.S. AI leadership. This included a mandate which led to NIST developing the NIST AI Risk Management Framework (discussed below).

The Act also established the National AI Advisory Committee, a high-level group of experts that advises the President on AI policy matters.

Executive Order 13859: Maintaining American Leadership in AI

This Executive Order was signed by President Trump in February 2019. It remains in force today.

The purpose of this Executive Order is to promote investment in, and use of, AI across the federal government, as well as to “facilitate AI R&D” and the development of “breakthrough technology”.

Biden’s AI governance agenda

During the Biden administration, AI governance and safety were top policy priorities. Although this appeared to signal the beginning of a shift away from the historically free-market approach to technology regulation adopted by previous U.S. administrations, the shift did not last long.

President Biden attempted to balance promoting U.S. global AI leadership with upholding civil liberties and protecting citizens from unfair and harmful practices. As described throughout this article, various federal initiatives designed to strengthen the global position of the U.S. (such as AI export controls, investment restrictions, and spending on AI infrastructure and manufacturing) were complemented by initial efforts to regulate private sector AI activities and promote responsible AI.

The Blueprint for an AI Bill of Rights, for example, was developed by the White House Office of Science and Technology Policy and outlined Biden’s AI policy vision. It was defined by five core principles for AI development and use: i) Safe and Effective Systems, ii) Algorithmic Discrimination Protections, iii) Data Privacy, iv) Notice and Explanation, and v) Human Alternatives, Consideration, and Fallback.

The Blueprint argued that AI systems used in healthcare have “proven unsafe, ineffective, or biased” and algorithms used in recruitment and credit scoring “reflect and reproduce existing unwanted inequities or embed new harmful bias and discrimination”.

President Biden also secured ‘Voluntary Commitments’ from 15 leading AI companies. These included security testing of AI models before release, as well as sharing and publicly reporting information on AI safety. The core purpose of these commitments was to ensure that advanced AI models were safe before being released.

Building on these initiatives, Executive Order 14110: Safe, Secure, and Trustworthy Development and Use of AI was signed by President Biden in October 2023. This represented the most comprehensive U.S. federal AI governance initiative to date. It mandated a major programme of work, entailing over 100 specific actions across over 50 federal entities.

Tangible resulting actions included the establishment of the U.S. AI Safety Institute and the publication of various NIST AI safety standards, guidelines, and toolkits (discussed below). Also, developers of the most powerful AI models were obliged to perform safety and security testing, and report results back to the U.S. government. However, this AI model evaluation regime was never fully operationalised.

Trump’s agenda: what ‘America First’ means for AI

President Trump’s ‘America First’ mantra is not just about tariffs, defence spending, and immigration; it is also relevant for AI.

The AI policy ambition of Trump’s second term is to strengthen U.S. global AI leadership and dominance, promote AI innovation, and advance deregulation.

Two decisive actions were taken by President Trump within days of his second term commencing.

On his first day in office, 20 January 2025, President Trump signed Executive Order 14148: Initial Rescissions of Harmful Executive Orders and Actions.

The purpose of this was simple: to revoke dozens of Executive Orders and Presidential Memoranda issued by President Biden. This included revocation of Executive Order 14110: Safe, Secure, and Trustworthy Development and Use of AI, which was the cornerstone of Biden’s AI governance agenda.

The second decisive move came 3 days later, when President Trump signed Executive Order 14179: Removing Barriers to American Leadership in AI. Doubling down on the revocation of Biden’s AI Executive Order, this order deemed the previous administration’s wider AI policy agenda a “barrier to American AI innovation”.

The stated policy of the new administration is to “sustain and enhance America’s global AI dominance in order to promote human flourishing, economic competitiveness, and national security”.

Trump’s Executive Order mandates the development, by July 2025, of an ‘AI Action Plan’ to achieve this policy objective. Top U.S. officials are now working on this.

As part of this, the federal government will run an exercise to identify, halt, and shut down any AI-related government activities or initiatives deemed contrary to the policy objective stated above. This may include AI governance and safety initiatives pursued under the mandate of Biden’s AI Executive Order.

The development of the AI Action Plan has received significant interest, with the public consultation (which has now closed) receiving 8,755 comments in under 2 months.

Despite the policy shift, it is important to note that the Trump administration has not dismantled Biden’s entire AI policy agenda, nor has it abandoned the concept of AI governance and risk management.

For example, much of what was previously implemented on AI-related export controls and investment restrictions, as well as Biden’s Executive Order on AI Infrastructure, remains in force. Furthermore, both of the recent memoranda on federal agency use and acquisition of AI, published by the Office of Management and Budget (OMB), emphasise the importance of responsible AI adoption and sound AI governance and risk management practices.

What is clear, however, is that the Trump administration has no intention of imposing any major AI governance regulations or restrictions on private sector AI development and deployment.

AI export controls and investment restrictions

One potential point of relative harmony between the Biden and Trump administrations is the stance on AI-related export controls and investment restrictions.

The U.S. deems the development of AI in “countries of concern” as a “national security threat”.

Concerted attempts to control how, where, and at what pace AI capabilities are developed have become a fundamental element of U.S. federal AI policy and the broader mission to sustain U.S. leadership in this foundational technology.

Various laws and regulations were introduced during Biden’s presidency that significantly restrict which countries U.S. advanced AI computing chips and AI model weights can be exported to, as well as which countries’ AI industries U.S. persons can invest in.

The key laws are summarised below:

Framework for Artificial Intelligence Diffusion

An interim final rule issued by the U.S. Department of Commerce’s Bureau of Industry and Security (BIS). This is the most comprehensive U.S. regulation restricting the export of AI-related technologies.

It became effective on 13 January 2025, but most requirements are not applicable until 15 May 2025, when the consultation period closes.

The purpose of this rule is twofold: to ensure that a) model weights of the most advanced ‘closed’ (i.e., not open-source) U.S. AI models are only stored outside of the U.S. “under stringent security conditions” and b) the advanced computing chips required to develop, train, and run these models are not exported to restricted countries.

This is the first time that U.S. export controls apply to model weights. However, the restrictions on the export and transfer of model weights apply only to models trained using 10^26 or more floating point operations (FLOP) in total (i.e., a very significant amount of computation).

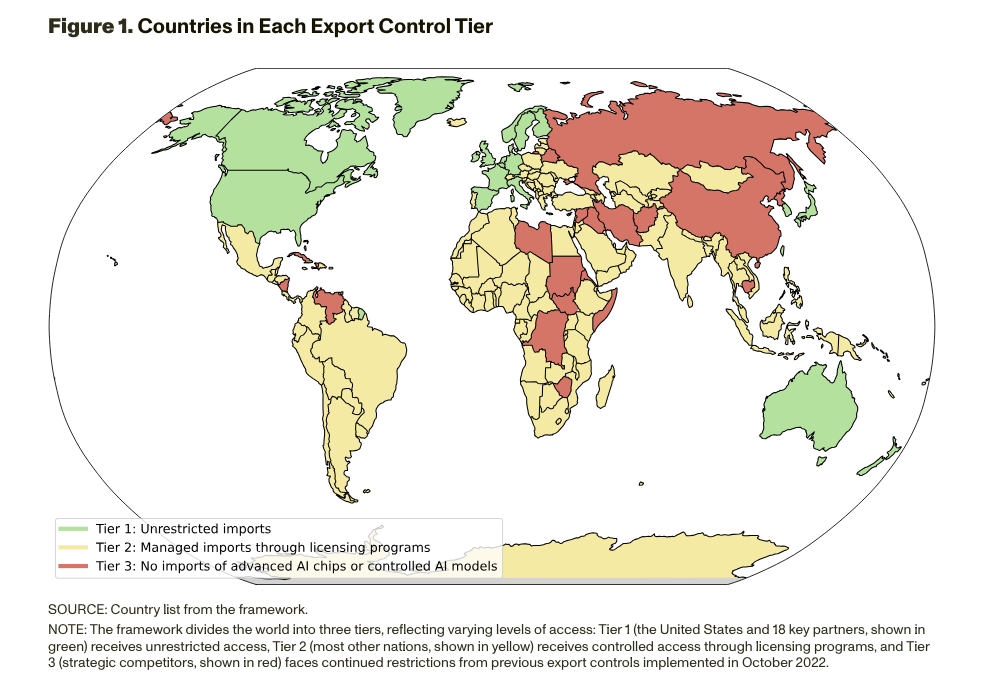

There are 3 tiers of countries. Tier 1 countries, consisting of 18 U.S. allies, can import U.S. advanced computing chips and deploy and further develop advanced closed U.S. AI models locally (subject to compliance with model storage security requirements). Tier 2 countries can only import advanced computing chips with a dedicated export license and cannot deploy and further develop advanced closed U.S. AI models locally.

Tier 3 countries, which include arms-embargoed nations like China, Russia, Iran, and North Korea, cannot import advanced computing chips from the U.S., nor can they deploy or further develop advanced proprietary U.S. AI models locally.

Image source: RAND, 2025
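The three tiers and the compute threshold described above lend themselves to a simple lookup. Below is a minimal Python sketch, assuming only the simplified summary given in this article rather than the full text of the BIS rule (which contains exceptions, licensing details, and country lists not modelled here). The names `Tier`, `WEIGHTS_THRESHOLD_FLOP`, `weights_controlled`, `chip_import`, and `may_deploy_controlled_model` are hypothetical and do not come from any official tool.

```python
from enum import Enum

# Hypothetical constant: the article's threshold for controls on closed model
# weights, measured as total training operations (FLOP), not FLOP per second.
WEIGHTS_THRESHOLD_FLOP = 10**26


class Tier(Enum):
    """Country tiers as summarised in this article (simplified, illustrative)."""
    TIER_1 = 1  # 18 U.S. allies
    TIER_2 = 2
    TIER_3 = 3  # arms-embargoed countries, e.g. China, Russia, Iran, North Korea


def weights_controlled(training_flop: float, is_open_weights: bool) -> bool:
    """True if a model's weights fall within the simplified weight controls:
    closed models trained at or above the compute threshold."""
    return (not is_open_weights) and training_flop >= WEIGHTS_THRESHOLD_FLOP


def chip_import(tier: Tier) -> str:
    """Import status for advanced computing chips, per the article's summary."""
    if tier is Tier.TIER_1:
        return "permitted"
    if tier is Tier.TIER_2:
        return "licence required"
    return "prohibited"


def may_deploy_controlled_model(tier: Tier) -> bool:
    """Only Tier 1 may deploy and further develop advanced closed models locally.
    The model storage security requirements that also apply are not modelled."""
    return tier is Tier.TIER_1


# Usage: a closed model trained with 3 x 10^26 FLOP, assessed for a Tier 2 country.
print(weights_controlled(3 * 10**26, is_open_weights=False))  # True
print(chip_import(Tier.TIER_2))  # licence required
print(may_deploy_controlled_model(Tier.TIER_2))  # False
```

Keeping the threshold as a single named constant makes it easy to update if the rule changes; the output is best treated as a first-pass triage aid rather than a compliance determination.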

The Framework for AI Diffusion builds on and significantly expands the scope of previous U.S. export control initiatives, which targeted the export and diffusion of advanced computing chips, chip design software, and semiconductor manufacturing equipment, but not model weights. Furthermore, the previous rules were more narrowly focused on adversarial countries. These laws include (but are not limited to):

Implementation of Additional Export Controls: Certain Advanced Computing and Semiconductor Manufacturing Items; Supercomputer and Semiconductor End Use; Entity List Modification

An interim final rule issued by the Bureau of Industry and Security (BIS) in October 2022.

This rule introduced export controls on advanced and high-performance computing chips, as well as items and components required for semiconductor manufacturing.

It specifically targeted exports to China, with the aim of cutting off supplies of U.S. hardware which enables advanced AI development.

Export Control Reform Act (ECRA) of 2018

The rules summarised above are based on the ECRA, which provides the U.S. government with the statutory authority to introduce export controls and restrictions on ‘dual use technology’, such as AI software and hardware.

To complement the AI export control regime, the U.S. Department of the Treasury also recently issued its Final Rule on Outbound Investment Screening, which took effect in January 2025. This rule significantly restricts the ways in which U.S. persons can invest in, or financially support, advanced AI activities in Chinese or Chinese-owned companies.

The scope of this new rule is investment in companies and activities relating to “semiconductors and microelectronics, quantum information technologies, and AI”.

Various transactions are outright prohibited, such as investing in advanced AI intended for military, government intelligence, or mass surveillance end uses. Other AI-related transactions and investments are permitted, but only under strict conditions, including notification to the U.S. government.

Federal government use and acquisition of AI

There have been a surprisingly large number of laws and initiatives that directly address how the U.S. federal government adopts and uses AI.

All of these are designed to encourage and promote the adoption and use of AI by the U.S. federal government and its countless agencies. There is a strong AI governance flavour throughout these laws.

Notable laws and initiatives include:

Executive Order 13960: Promoting the Use of Trustworthy Artificial Intelligence in the Federal Government

This Executive Order was signed by President Trump in December 2020.

It mandated that federal agency use of AI must be “safe and secure” and that AI use cases must be “accurate, reliable, and effective”.

The purpose of this was to promote AI adoption in a manner that fosters public trust.

AI in Government Act of 2020

This law was designed to promote and facilitate the adoption of AI technologies across U.S. federal agencies.

It did this by establishing an AI Center of Excellence within the General Services Administration, as well as requiring OMB to publish an AI memorandum to federal agencies.

In April 2025, the OMB, which directly assists the President, published two memoranda: one on federal agency use of AI and one on federal agency acquisition of AI. Issued under the mandate in President Trump’s January 2025 AI Executive Order, these memoranda rescinded the equivalent memoranda that OMB had published on the same topics in 2024.

Although these memoranda are targeted exclusively at U.S. federal agencies, they contain many practical insights that AI governance professionals can use to develop and mature their internal organisational frameworks.

The two OMB memoranda also emphasise the importance of AI governance and risk management to a greater degree than any other output from the current administration to date.

OMB MEMORANDUM M-25-21: Accelerating Federal Use of AI through Innovation, Governance, and Public Trust

Published by the OMB on 3 April 2025. Rescinds OMB Memorandum M-24-10.

Federal agency AI adoption is elevated to a top strategic priority.

Federal agencies are required to develop AI strategies which outline how they will increase their AI maturity and adopt new AI use cases which benefit the public and reduce costs.

To support this, federal agencies are strongly encouraged to reuse and share AI code, models, and data assets, to increase efficiencies, drive collaboration, and boost innovation.

There are also new requirements to promote AI literacy and training across the federal workforce.

Despite the emphasis on innovation and accelerating AI adoption, there are important AI governance requirements for high-impact AI use cases, including pre-deployment testing, AI impact assessments, continuous monitoring, and human oversight.

Use of AI is classified as “high-impact” when it serves as the “principal basis for an agency decision or action” that has a legal, material, binding, or significant effect on rights or safety.

A lengthy but non-exhaustive list of high-impact AI use cases is provided, including AI used for the physical movement of robots, control of access to government facilities, the blocking of protected speech, and AI-enabled medical devices.

Although responsible AI adoption is key, agencies must ensure that AI governance is efficient and risk-based, leverages existing processes, and does not stifle innovation.

OMB MEMORANDUM M-25-22: Driving Efficient Acquisition of AI in Government

Published by the OMB on 3 April 2025. Rescinds OMB Memorandum M-24-18.

The OMB acknowledges that use of externally acquired AI is a key aspect of federal agency AI adoption.

The policy position is to support the U.S. AI industry by buying U.S. AI products and services wherever possible.

However, agencies must avoid vendor lock-in, negotiate contracts that protect U.S. interests, and restrict and control how government data and intellectual property are used for AI training.

This memorandum provides detailed guidance and requirements that federal agencies must adhere to during each stage of the AI acquisition lifecycle, defined as:

Identification of requirements

Market research and planning

Solicitation development

Selection and award

Contract administration

Contract closeout

NIST and the U.S. AI Safety Institute

The National Institute of Standards and Technology (NIST) has been the most active U.S. government agency on AI governance-related issues. NIST, which is part of the U.S. Department of Commerce, is one of the most influential standard-setting organisations worldwide.

NIST released its AI Risk Management Framework (AI RMF) in January 2023. It has since become one of the most widely used frameworks in the field of AI governance.

The AI RMF outlines a practical approach to AI governance, which organisations can use to manage AI risks and to design and develop their own frameworks. Its structure centres on four core functions: i) Govern, ii) Map, iii) Measure, and iv) Manage. Each function recommends several tangible actions and controls covering different aspects of AI risk management, including policies and processes.

The U.S. AI Safety Institute, which is part of NIST, was launched in November 2023, following President Biden’s Executive Order on AI safety. Its mission is to “identify, measure, and mitigate the risks of advanced AI systems”. Thus far, it has produced technical research materials and engaged with partners in other countries via the International Network of AI Safety Institutes, which it convened last year.

Below is a snapshot of AI governance and safety resources developed and published by NIST and the U.S. AI Safety Institute:

Four Principles of Explainable AI (September 2021)

Towards a Standard for Identifying and Managing Bias in Artificial Intelligence (March 2022)

NIST AI Risk Management Framework (January 2023)

Reducing Risks Posed by Synthetic Content: An Overview of Technical Approaches to Digital Content Transparency (April 2024)

AI Risk Management Framework: Generative AI Profile (July 2024)

Secure Software Development Practices for Generative AI and Dual-Use Foundation Models (July 2024)

Managing Misuse Risk for Dual-Use Foundation Models (January 2025)

Adversarial Machine Learning: A Taxonomy and Terminology of Attacks and Mitigations (March 2025)

What’s coming next?

The U.S. AI policy landscape is, as ever, a moving target. Trump’s 2025 shift may have been fatal for much of Biden’s AI governance and safety agenda, but it has not yet affected the Biden-era regime of AI export controls and investment restrictions.

Furthermore, despite the current administration's buccaneering, pro-innovation rhetoric, an emphasis on responsible federal government use of AI persists. Alongside this, NIST (and the U.S. AI Safety Institute) continues to publish important and useful AI governance materials.

The next key milestone is the comprehensive AI Action Plan, which is due to be announced this summer. Once it is published, we will learn which other AI laws and initiatives will be consigned to history, and what will take their place. For now, however, we can safely rule out any comprehensive federal AI governance law in the U.S.