Hey 👋

I’m Oliver Patel, author and creator of Enterprise AI Governance.

This free newsletter delivers practical, actionable and timely insights for AI governance professionals.

My goal is simple: to empower you to understand, implement and master AI governance.

For more frequent updates, follow me on LinkedIn.

This week’s newsletter wraps up the series covering the top 12 papers on agentic AI governance. Most of the papers in this series were published in just the past few months, so the analysis and summaries reflect the current frontier of this space. Check out the rest of the series here:

This post (part 3) features papers on agentic AI and the EU AI Act, how to evaluate AI agent capability levels, and what practical interventions can be applied and implemented to mitigate the novel risks of agentic AI.

Top 12 Papers on Agentic AI Governance - full list

Practices for Governing Agentic AI Systems - Yonadav Shavit et al., OpenAI - December 2023

AI Agents: Governing Autonomy in the Digital Age - Joe Kwon, Center for AI Policy - May 2025

How to Evaluate Control Measures for LLM Agents? A Trajectory from Today to Superintelligence - Tomek Korbak et al., UK AI Security Institute - April 2025

Agentic AI - Threats and Mitigations - OWASP - February 2025

Multi-Agentic system Threat Modelling Guide v1.0 - OWASP - April 2025

Governing AI Agents - Noam Kolt - January 2025

Strengthening AI Agent Hijacking Evaluations - U.S. AI Safety Institute technical staff - January 2025

Infrastructure for AI Agents - Alan Chan et al., Centre for the Governance of AI - January 2025

Fully Autonomous AI Agents Should Not be Developed - Margaret Mitchell et al., Hugging Face - February 2025

AI Agent Governance: A Field Guide - Jam Kraprayoon, Institute for AI Policy and Strategy (IAPS) - April 2025

Characterising AI Agents for Alignment and Governance - Atoosa Kasirzadeh & Iason Gabriel - April 2025

Ahead of the Curve: Governing AI Agents Under the EU AI Act - Amin Oueslati and Robin Staes-Polet - June 2025

BONUS: Initial Reflections on Agentic AI Governance - Oliver Patel - April 2025

Disclaimer: I am not sponsored by any of the below organisations or authors and I receive nothing in return for promoting these papers. All the credit belongs to the original authors and the sources are provided as hyperlinks 😊

Top 12 Papers on Agentic AI Governance - part 3 (papers 10-12)

10. AI Agent Governance: A Field Guide

Jam Kraprayoon, Institute for AI Policy and Strategy (IAPS)

In this report, Jam Kraprayoon provides a comprehensive overview of agentic AI governance. It is one of the most thorough yet accessible contributions on the topic. Rather than focusing on a single dimension, it zooms out and surveys the field more broadly.

The report covers AI agent definitions and key concepts, capabilities and limitations, how to evaluate and measure AI agent performance, the core risks that should be top of mind for practitioners and policymakers, and the most promising interventions for addressing and mitigating these risks.

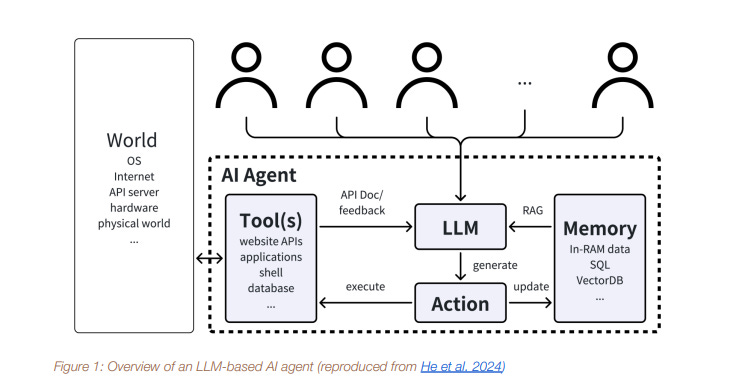

It starts by explaining what an AI agent is, with a handy visualisation provided below.

Image credit: AI Agent Governance: A Field Guide, Institute for AI Policy and Strategy (2025)

Performance and evaluation

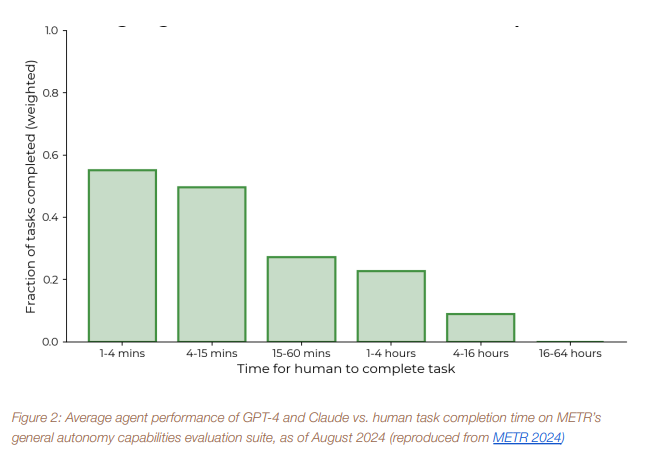

A summary of key findings on agentic AI performance is then outlined. There are various evaluation studies and benchmarks that assess the performance of AI agents on real-world tasks. These include benchmarks for software engineering, data science, cyber security, and research-related tasks. In general, the performance levels of today’s AI agents remain relatively limited. Perhaps they are even somewhat underwhelming. For example:

AI agents struggle with complex tasks that are open-ended and/or span longer time periods.

For example, studies highlight that today’s agents struggle on various tasks that take humans longer than 10-15 minutes to complete.

However, in some studies agents successfully execute tasks that take humans up to 4 hours to complete. But there is minimal evidence of agents being effective beyond this time-horizon.

The report highlights that AI agents will still be far cheaper than human workers with the relevant expertise (even factoring in the high compute costs). Therefore, as these performance levels increase, there will be powerful incentives for companies to delegate more of their work to agentic AI.

Another key point is that existing agentic AI benchmarks are most likely overestimating AI performance levels, due to inadequacies in their design. This is because it is difficult to design evaluations which adequately mirror the messy, unpredictable, and ever-changing nature of real-world tasks that require constant adaptation, precise judgement, and ‘common sense’.

Image credit: AI Agent Governance: A Field Guide, Institute for AI Policy and Strategy (2025)

Risks and challenges

Four core risks of agentic AI are highlighted: 1) misuse and malicious use; 2) accidents and loss of control; 3) security risks; and 4) other systemic risks.

1. Misuse and malicious use

AI agents increasingly execute impactful actions autonomously in the real world, and these capabilities can be manipulated or misused. This could be via taking control of another organisation’s AI agents (i.e., agent hijacking) or deploying agents designed to cause harm or disruption, such as disseminating disinformation or developing biological pathogens.

2. Accidents and loss of control

As AI autonomy levels increase, so do the risks and potential negative impact if things go wrong. Agents can malfunction or perform unreliably, potentially leading to real-world harm, especially if those agents are embedded in physical applications. Furthermore, as agents become increasingly capable and distributed in our activities and work, humans may lose control over the way in which they are deployed and the impact they have, due to our increasing dependence on them and the complexity of disentangling ourselves from this.

3. Security risks

The report notes that there are more attack surfaces for agentic AI systems, given the various components which are scaffolded on top of the core AI models, as well as the communication and coordination channels between agents in multi-agent systems. For example, a rogue agent embedded in a multi-agent system can be used to sabotage and exert control over the overall system’s performance. As agents autonomously execute actions in increasingly sensitive, real-world domains, the cyber security risks are elevated.

4. Other systemic risks

As agents become more widely deployed and embedded across society, taking on increasing amounts of work that previously only humans could do, the risk of mass workforce disruption and displacement increases. This could have myriad economic and societal knock-on effects, including heightened inequality and political instability, as wealth and power become further entrenched in the technological elite who develop, own, and manage AI agents and their underpinning infrastructure.

Interventions and mitigations

To tackle and mitigate these risks, the report proposes five themes of intervention, and several practical measures for each one, which serve as promising areas for future AI governance research and practice. These are:

Alignment: measures to align the behaviour and functioning of AI agents with the values and interests of their human developers and wider society.

Control: measures and guardrails to control and restrict the actions that agents can execute and the data or tools they can access or use.

Visibility: measures to ensure that humans understand and oversee the capabilities, limitations, and use of agents, before, during, and after deployment.

Security and robustness: measures and controls to secure agents from external threats and malicious actors.

Societal integration: measures designed to enable human and AI agent co-existence, such as liability and accountability regimes.
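To make the "Control" and "Visibility" themes more concrete, here is a minimal Python sketch of a tool allowlist with a human-approval step and an audit log. It is an illustration under stated assumptions, not a method taken from the report: the `ToolPolicy` class, the tool names, and the three outcomes ("allow", "escalate", "deny") are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ToolPolicy:
    """Hypothetical guardrail: which tools an agent may call, and when a human must approve."""

    allowed: set[str]
    needs_approval: set[str] = field(default_factory=set)
    audit_log: list[tuple[str, str]] = field(default_factory=list)

    def decide(self, tool: str, human_approved: bool = False) -> str:
        # Control: anything outside the allowlist is refused outright.
        if tool not in self.allowed:
            outcome = "deny"
        # Control: sensitive tools are paused until a human signs off.
        elif tool in self.needs_approval and not human_approved:
            outcome = "escalate"
        else:
            outcome = "allow"
        # Visibility: every decision is recorded for later review.
        self.audit_log.append((tool, outcome))
        return outcome


# Illustrative tool names only.
policy = ToolPolicy(
    allowed={"search_docs", "send_payment"},
    needs_approval={"send_payment"},
)
```

With this policy, a call to `policy.decide("search_docs")` is allowed, `policy.decide("send_payment")` is escalated until a human approves it, and any tool that is not on the allowlist is denied. The design choice worth noting is that the default is denial: the agent only gets the access that someone has explicitly granted.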

The bottom line: the report’s core argument is that although agentic AI amplifies existing AI risks like bias and hallucination, increasing levels of AI autonomy, and the potential loss of human control that could emerge, mean that agentic AI governance poses fundamentally new challenges. This is why it is incumbent on AI governance leaders to review their existing frameworks, policies, and processes, and adapt them to adequately address the challenges and risks posed by agentic AI.

11. Characterising AI Agents for Alignment and Governance

Atoosa Kasirzadeh & Iason Gabriel - April 2025

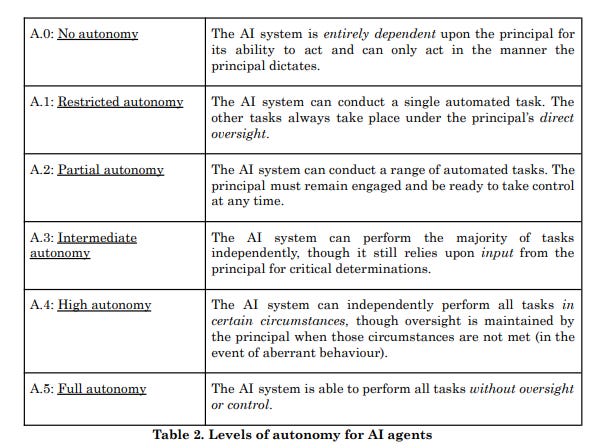

This paper is called “characterising AI agents” because it outlines an accessible and practical framework for evaluating and determining an AI agent’s capability level, by assessing it across a range of relevant dimensions.

The authors argue that the properties and capabilities of an agentic AI system (i.e., its capability level) are directly linked to its risk level and should thus determine how it is governed. Therefore, to apply proportionate, tailored, and robust governance, practitioners require a standardised yet nuanced way of evaluating an AI agent’s capability level, so that they can determine what degree of governance is appropriate, and what type of interventions, mitigations, and guardrails should be applied.

The key dimensions for evaluating and classifying an AI agent’s capability level are:

1. Autonomy: to what extent can the agent perform actions without human input or approval?

2. Efficacy: to what extent can the agent have a causal and material impact on the external environment (including both the digital and physical realms)?

3. Goal complexity: to what extent can the agent understand, break down, plan and pursue complex goals, subgoals, and activities?

4. Generality: to what extent can the agent perform different types of tasks and activities, and operate effectively across different domains?

For each dimension, the authors provide a set of criteria that enable you to score the agent’s level. This enables an overall classification of the AI agent’s capability level across the four dimensions. For example, the below image highlights how you can classify an agent’s autonomy level.

Image credit: Characterising AI Agents for Alignment and Governance, Atoosa Kasirzadeh & Iason Gabriel (2025)

In sum, the higher the levels of autonomy, efficacy, goal complexity, and generality, the more capable an agent is, and the greater the risks it poses. Therefore, it is crucial to understand what your agents can (and cannot) do, before you decide how to govern them. An AI agent that has read-only access to a database and can retrieve and summarise information is very different to a multi-agent system that processes payroll, updates payslip records, and initiates payments.
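The contrast between those two agents can be sketched in a few lines of Python. This is an illustration only: the 0-5 scale, the scores assigned to each agent, the tier names, and the rule that the highest single dimension drives the tier are all assumptions made for this example, and the paper's own scoring criteria should be used in practice.

```python
from dataclasses import dataclass

MAX_LEVEL = 5  # Hypothetical scale; not taken from the paper.
DIMENSIONS = ("autonomy", "efficacy", "goal_complexity", "generality")


@dataclass(frozen=True)
class AgentProfile:
    """Scores for one agent across the four dimensions."""

    name: str
    autonomy: int
    efficacy: int
    goal_complexity: int
    generality: int

    def __post_init__(self) -> None:
        # Reject scores outside the assumed 0..MAX_LEVEL range.
        for dim in DIMENSIONS:
            level = getattr(self, dim)
            if not 0 <= level <= MAX_LEVEL:
                raise ValueError(f"{dim} must be between 0 and {MAX_LEVEL}")

    def levels(self) -> dict[str, int]:
        return {dim: getattr(self, dim) for dim in DIMENSIONS}


def governance_tier(profile: AgentProfile) -> str:
    """Illustrative rule: the highest-scoring dimension sets the governance tier."""
    peak = max(profile.levels().values())
    if peak >= 4:
        return "enhanced"
    if peak >= 2:
        return "standard"
    return "light"


# Made-up scores for the two agents described above.
read_only_agent = AgentProfile("database summariser", 1, 1, 1, 1)
payroll_system = AgentProfile("payroll multi-agent system", 4, 4, 3, 2)
```

Here `governance_tier(read_only_agent)` returns `"light"` and `governance_tier(payroll_system)` returns `"enhanced"`. Taking the maximum rather than the average is a deliberately cautious choice: an agent that scores very high on one dimension is not treated as low-risk just because it scores low on the others.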

The bottom line: This paper proposes an actionable framework for agentic AI evaluation. You can incorporate this into your AI risk assessment process, to enable practitioners to evaluate the capability level, risks, and appropriate level of governance that should be applied to AI agents. By applying this framework, AI governance leaders can ensure that those AI agents are governed in a proportionate, tailored, and risk-based manner. This is critical, given the inevitable widespread adoption of agentic AI across the enterprise, the economic incentives to streamline and automate work, and the democratised way in which such agents will be developed with low- and no-code tools.

12. Ahead of the Curve: Governing AI Agents Under the EU AI Act

Amin Oueslati and Robin Staes-Polet, The Future Society - June 2025

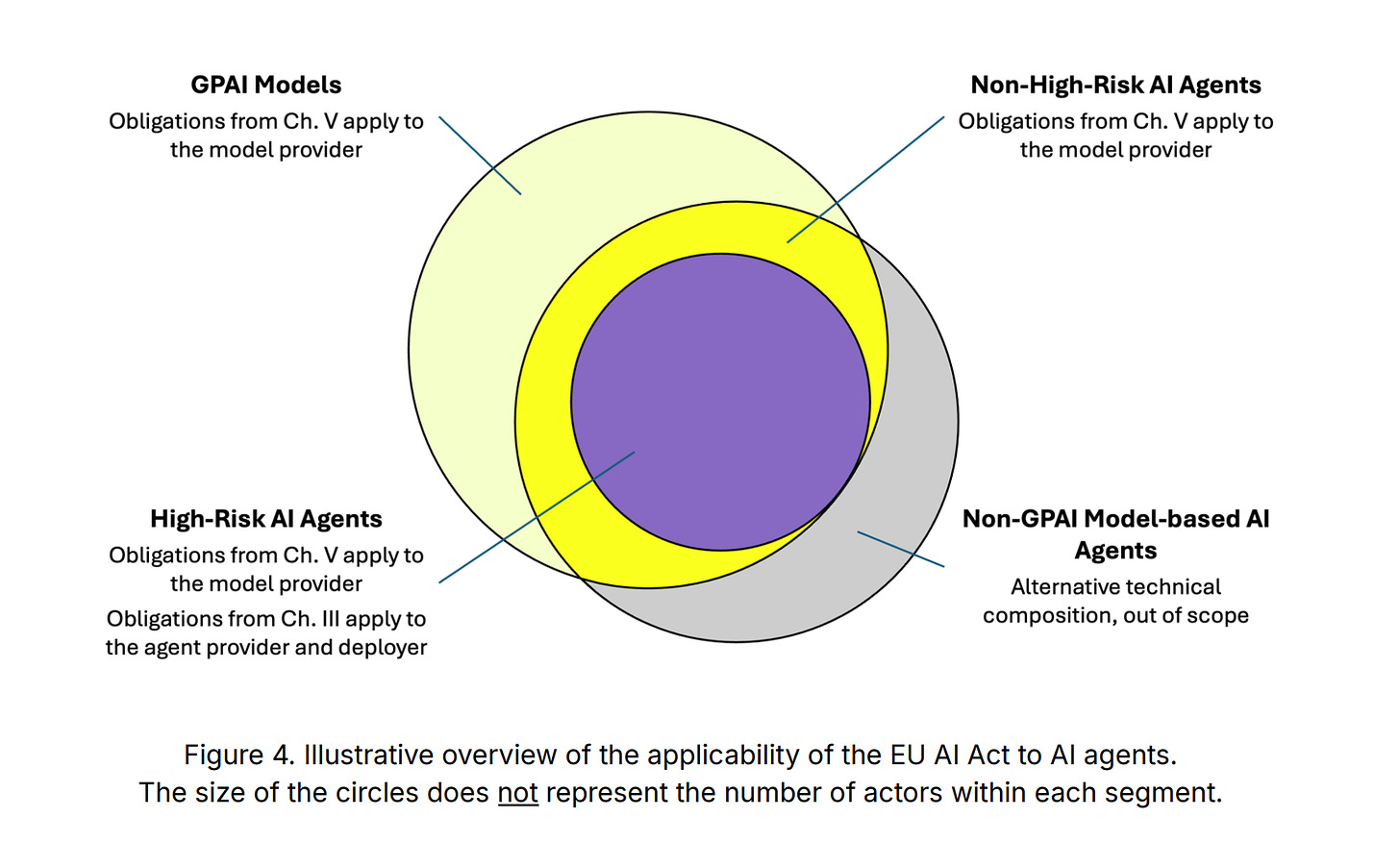

This paper is the first detailed contribution on how the EU AI Act regulates and governs agentic AI. Therefore, it is an important read for anyone working in an organisation that is simultaneously navigating AI Act compliance readiness and experimenting with and adopting agentic AI.

Although the EU AI Act does not explicitly mention AI agents, it does govern them in various ways. See the below image for a visual breakdown.

Image credit: Ahead of the Curve: Governing AI Agents Under the EU AI Act, The Future Society (2025)

The report argues that many of the obligations for providers of general-purpose AI (GPAI) models are relevant for AI agents. This is because the agents themselves are based upon the foundation models which these provisions target.

For example, the GPAI model provider obligations for transparency regarding training data, implementing copyright compliance policies, and maintaining technical documentation, are relevant for agentic AI. If the base model is a GPAI model with systemic risk, additional obligations are required, including risk assessment, safety and security testing, red-teaming, and incident reporting.

In some cases, an agentic AI system could also (or alternatively) be classified as a high-risk AI system. In this scenario, the obligations and requirements for providers and deployers of high-risk AI systems apply. This includes the obligation for deployers to implement human oversight, which could be challenging. Organisations should exercise caution if using AI agents, as part of a high-risk AI system, to perform important tasks or make decisions, as some degree of human oversight is legally required. No matter how autonomous AI becomes, it does not change the fundamental provisions of the AI Act.

The report highlights that the AI use case determines whether or not an AI agent is a high-risk AI system, and that providers may deliberately prohibit deployers, in the Acceptable Use Policy, from using the AI agent for high-risk use cases, so that they are not subject to the provider obligations.
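As a rough aid for triage, the obligations named in this summary can be arranged as a simple checklist. The Python sketch below mirrors only what is described above, not the full text of the AI Act, and the function name, parameters, and role labels are hypothetical.

```python
def relevant_obligations(
    built_on_gpai_model: bool,
    gpai_with_systemic_risk: bool,
    high_risk_use_case: bool,
) -> dict[str, list[str]]:
    """Group the obligations mentioned in this summary by the role they fall on."""
    obligations: dict[str, list[str]] = {}

    if built_on_gpai_model:
        provider = [
            "training data transparency",
            "copyright compliance policy",
            "technical documentation",
        ]
        # Extra obligations apply only if the base model carries systemic risk.
        if gpai_with_systemic_risk:
            provider += [
                "risk assessment",
                "safety and security testing",
                "red-teaming",
                "incident reporting",
            ]
        obligations["GPAI model provider"] = provider

    # The use case, not the agent's autonomy, triggers the high-risk regime.
    if high_risk_use_case:
        obligations["high-risk system deployer"] = ["human oversight"]

    return obligations
```

For instance, an agent built on a GPAI model without systemic risk and used for a high-risk use case would surface the three provider-side obligations plus the deployer's human oversight obligation. The high-risk regime contains further requirements for providers and deployers that this summary does not enumerate, so the checklist is a starting point rather than a complete mapping.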

The bottom line: leading AI governance frameworks, like the EU AI Act, were developed before LLM-based AI agents were being widely deployed by organisations. For this reason, the AI Act does not explicitly address how the novel risks of agentic AI should be mitigated. However, despite no explicit reference to AI agents, this report highlights that there are various ways in which the AI Act regulates the increasingly autonomous agentic AI systems that are proliferating today.