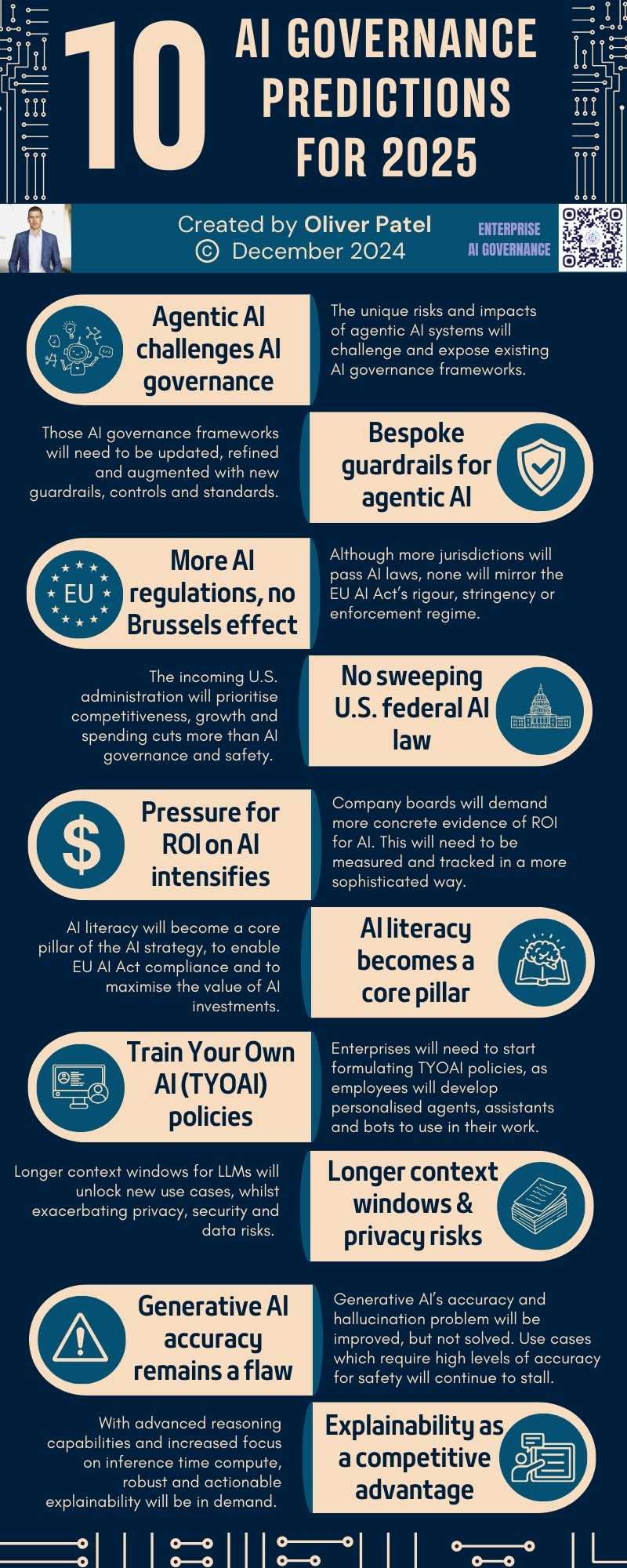

10 AI Governance predictions for 2025

What's coming next? | Edition #3

Hey 👋

I’m Oliver Patel, author and creator of Enterprise AI Governance.

This free newsletter delivers practical, actionable and timely insights for AI governance professionals.

My goal is simple: to empower you to understand, implement and master AI governance.

I am thrilled that over 850 professionals have subscribed to this newsletter in the past two weeks. If you haven’t already, sign up below and share it with your colleagues and friends. Thank you to all!

For more frequent updates, follow me on LinkedIn.

I hope you have all had a wonderful and restful holiday season.

This time of year is awash with predictions for 2025 and I could not resist getting involved.

This week’s newsletter looks ahead to next year, covering:

✅ Top 10 AI governance predictions for 2025

✅ 3 practical tips to navigate the year

✅ Cheat Sheet of the week: 10 AI governance predictions for 2025

I hope the value of these predictions will be judged not by how many come true (it definitely won’t be all of them), but by how effectively they help you decide what to prioritise, focus on and look out for throughout the year.

Top 10 AI governance predictions for 2025

Agentic AI will challenge AI governance frameworks. Many have already declared 2025 the year of agentic AI. The shift from generative AI to agentic AI is significant: AI systems will progress from merely generating content to autonomously developing plans, solving problems and executing tasks across a range of applications. Although these systems may be built on the same underlying technology and models as generative AI, agentic AI nonetheless signals a meaningful shift in how AI is used, the risks it poses and the real-world impact it can have. Existing AI governance frameworks, such as the EU AI Act, the NIST AI Risk Management Framework and ISO/IEC 42001, will be tested and exposed by the unique risks and impacts of agentic AI. So will the controls that organisations have implemented under them. This will be especially true for AI risk assessments, human oversight and monitoring, which will have to become even more adaptive, dynamic and continuous.

Bespoke guardrails will emerge for agentic AI. Novel guardrails, controls and standards will be required to address and mitigate the unique risks of agentic AI. Existing approaches to AI governance will remain relevant, but they will need to be updated, refined and augmented with a new suite of guardrails. For example, guardrails and permissions will be needed to determine and restrict which applications and data sources AI agents can access, retrieve from and integrate with. Similarly, thresholds will need to be set to control which types of tasks and actions AI agents can and cannot perform, and which other AI agents they should work with to execute these tasks. Consider a travel company that uses an AI agent to book and amend trips for customers based on their natural language prompts. That company may want to prevent the agent from booking anything without customer approval. It may also want to require the agent to always present multiple options, or bar it from booking anything above a certain total cost or with an inflexible cancellation policy. And it certainly would not want the agent to share one customer’s itinerary with other customers.
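To make the travel-company scenario more concrete, here is a minimal Python sketch of how such guardrails might be expressed as checks that run before an agent acts. Everything in it is an assumption for illustration, not drawn from any particular agent framework. This includes the `BookingOption` type, the `authorise_booking` and `tool_permitted` functions, the tool names and the cost cap.

```python
from dataclasses import dataclass

# Hypothetical policy values; a real company would set its own.
ALLOWED_TOOLS = {"flight_search", "hotel_search", "booking_api"}
MAX_TOTAL_COST = 5000.0
MIN_OPTIONS_PRESENTED = 2


@dataclass
class BookingOption:
    customer_id: str   # the customer this itinerary belongs to
    total_cost: float  # overall trip cost
    refundable: bool   # True if the cancellation policy is flexible


def tool_permitted(tool_name: str) -> bool:
    """Permission check: the agent may only call tools on the allowlist."""
    return tool_name in ALLOWED_TOOLS


def authorise_booking(chosen: BookingOption,
                      presented: list[BookingOption],
                      customer_approved: bool,
                      requester_id: str) -> list[str]:
    """Return the policy violations; an empty list means the booking may proceed."""
    violations = []
    if not customer_approved:
        violations.append("customer approval required")
    if len(presented) < MIN_OPTIONS_PRESENTED:
        violations.append("too few options presented")
    # Never surface another customer's itinerary to this requester.
    if any(opt.customer_id != requester_id for opt in presented):
        violations.append("options include another customer's itinerary")
    if chosen.total_cost > MAX_TOTAL_COST:
        violations.append("total cost above cap")
    if not chosen.refundable:
        violations.append("inflexible cancellation policy")
    return violations


options = [BookingOption("c1", 1200.0, True), BookingOption("c1", 900.0, False)]
print(authorise_booking(options[0], options, True, "c1"))  # []
print(authorise_booking(options[1], options, True, "c1"))  # ['inflexible cancellation policy']
print(tool_permitted("send_email"))                         # False
```

The function returns a list of violations rather than a single yes/no. This gives the agent, or a human reviewer, a specific reason to act on, and it leaves a record that can feed into ongoing monitoring.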

More AI regulations, but no Brussels effect. In 2025 we will see more jurisdictions passing laws and regulations that aim to mitigate AI risks and promote trustworthy AI. For example, AI laws are currently progressing through the legislative process in Brazil, Canada, Peru, Taiwan, Turkey and Vietnam. Many countries have undoubtedly been inspired by the EU AI Act and its risk-based approach, but none will go as far as the EU. Concerns about domestic competitiveness, innovation and economic growth will dissuade countries from adopting AI laws and enforcement regimes as comprehensive, stringent and rigorous as the EU AI Act. The desire to align with EU standards in order to secure trade benefits is one of the key drivers of the Brussels effect. In many jurisdictions, however, competitiveness concerns will trump it.

There will be no sweeping federal U.S. AI law. The comprehensive victory of President Trump and the Republican Party in November’s elections means it is less likely than ever that the U.S. will pass a federal AI law aimed at promoting responsible AI. For the incoming U.S. administration, competitiveness, innovation and maintaining America’s position as the world’s AI leader will be top of mind. Many proposals for federal AI laws have been submitted. Despite this, the patchwork of state laws will keep growing, and so will the regulatory complexity for firms operating across the U.S. Furthermore, the push for government efficiency and federal budget cuts, spearheaded by Elon Musk, will be intense. Against this backdrop, some federal AI governance and safety initiatives and investments could be curtailed, and many of them stemmed from President Biden’s Executive Order 14110.

Company boards will demand return on investment (ROI) for AI. ROI will be one of the key words of 2025. Since ChatGPT was released in 2022 and generative AI went mainstream, there has been ample goodwill towards AI in company boardrooms. You would be hard pressed to find a corporate leader who does not agree that AI is pivotal to the future success and competitiveness of their company. However, this goodwill cannot be taken for granted and will not last forever. Company earnings are reported each quarter, not each decade. AI clearly has the long-term potential to transform every industry. Even so, the pressure to demonstrate ROI on recent generative AI investments will intensify. In response, the ways in which value and ROI are measured, assessed and tracked will become more sophisticated and standardised. Moreover, smart companies will pivot away from a scattergun approach of hundreds of use cases. Instead, they will focus on a select few strategic pillars with the potential to transform their business.

AI literacy will become a core pillar of the AI strategy. Leading organisations understand that it is not enough to give employees access to cutting-edge AI tools and expect transformative gains in efficiency, productivity and innovation. Forward-thinking approaches to AI literacy centre on empowering employees to understand how they can adopt and embrace AI, and how humans and AI can work together most effectively. AI literacy will become a core pillar of the enterprise AI strategy and its associated implementation programmes. This will serve to prepare for the February 2025 EU AI Act compliance deadline and, perhaps more importantly, to maximise the value of AI investments. To be effective, this will have to go beyond generic company-wide training. I will provide a thorough explanation of my ‘4 Layers of AI Literacy Framework’ in a future edition of Enterprise AI Governance.

Train your own AI (TYOAI) policies: for personalised agents, assistants and bots. For many years, organisations have had BYOD (bring your own device) policies. In 2025, organisations will need to start thinking about BYOAI (bring your own AI) policies, or, perhaps more fittingly, TYOAI (train your own AI) policies. It will not be long before non-technical employees can train and fine-tune AI models on their own work, documents and communications, so that the models generate content closely resembling their own. Add no-code, drag-and-drop AI agent builder tools into the mix, and employees will also be able to integrate these personally trained models with ‘external’ applications like email, calendar and productivity software. They could then autonomously ‘outsource’ specific tasks, like replying to emails and scheduling meetings. All of this may not become a reality in 2025. It is, however, certainly on the horizon, so it needs to become a focus for enterprise AI governance professionals. In a world where we can all train our own AI, meaningful AI literacy will be non-negotiable.

Longer context windows will unlock new use cases, whilst exacerbating privacy and security risks. One of the key technical advancements we will see in 2025 is increasingly long context windows, which give language models near-infinite memory. Put simply, a context window is the maximum amount of text, measured in tokens, that an LLM can process and ‘remember’ at once. A larger window lets more relevant information inform the output, which can improve its quality, accuracy and usefulness. OpenAI’s ‘original’ GPT-3.5 model had a maximum context length of 4k tokens. For the new o1 models, it is 128k tokens. Extremely long context windows allow huge numbers of documents to be included in each prompt, and they let the LLM recall and leverage all previous interactions with the user. This will lead to more powerful and personalised AI applications. It will also exacerbate privacy risks relating to lawful processing, data retention, deletion and the right to be forgotten. If everyone can input huge numbers of documents into each prompt, the risk of unlawful data processing (e.g., inputting restricted or confidential documents) will increase. Machine unlearning will therefore continue to be an active and important field of research.
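The sketch below illustrates the arithmetic and shows where a privacy control could sit. It packs documents into a prompt under a token budget and refuses documents with a blocked classification. It rests on several assumptions. It uses a crude heuristic of about four characters per token for English text (real tokenisers vary). It also assumes the window is shared between the prompt and the response, as it is for many models. The classification labels, document names and function names are hypothetical.

```python
from dataclasses import dataclass

CHARS_PER_TOKEN = 4  # rough heuristic for English text; real tokenisers vary


@dataclass
class Document:
    name: str
    text: str
    classification: str  # hypothetical labels: "public", "internal", "confidential"


def estimate_tokens(text: str) -> int:
    # Ceiling division so any non-empty text counts as at least one token.
    return -(-len(text) // CHARS_PER_TOKEN)


def build_prompt_context(docs, context_window, reserved_for_output,
                         blocked=("confidential", "restricted")):
    """Greedily select documents that fit the token budget, skipping blocked classifications."""
    budget = context_window - reserved_for_output  # leave room for the model's reply
    included, excluded, used = [], [], 0
    for doc in docs:
        if doc.classification in blocked:
            excluded.append((doc.name, "blocked classification"))
            continue
        cost = estimate_tokens(doc.text)
        if used + cost > budget:
            excluded.append((doc.name, "exceeds token budget"))
            continue
        included.append(doc.name)
        used += cost
    return included, excluded, used


docs = [
    Document("report", "a" * 40_000, "internal"),  # about 10,000 tokens
    Document("memo", "b" * 2_000, "public"),       # about 500 tokens
    Document("hr_file", "c" * 400, "confidential"),
]
print(build_prompt_context(docs, 4_096, 1_000))    # a 4k-token window
print(build_prompt_context(docs, 128_000, 1_000))  # a 128k-token window
```

With these inputs, the 4k-token window admits only the short memo, while the 128k-token window admits both the report and the memo. The confidential file is excluded either way. Once a larger window removes the size constraint, policy checks like this one are what keep restricted material out of the prompt.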

Generative AI’s accuracy and hallucination problem will be improved, but not solved. In 2025, AI governance professionals will (and should) continue to be wary of green-lighting generative AI use cases and applications that require extremely high levels of accuracy. Many techniques and research efforts aim to address the accuracy problem, such as RAG (retrieval augmented generation), fine-tuning, longer context windows, output guardrails and confidence scores. Despite them, by the end of 2025 I do not think we will be using generative AI in contexts where erroneous or unreliable information poses a mission-critical safety risk. This is due to the fundamentally non-deterministic nature of generative AI models, which can generate different outputs from the same inputs. I would not go out on a limb on this one, which is why it is not a standalone prediction. Still, traditional, discriminative approaches to machine learning may become fashionable again for certain enterprise use cases, given their greater accuracy, reliability and explainability.
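The toy sketch below illustrates the non-determinism point. It samples a ‘next token’ from a softmax over hypothetical scores, which is a simplified picture of how LLMs typically generate text. With a non-zero temperature, the same input can produce different answers on different runs. Greedy decoding, which always chooses the top-scoring token, is deterministic in this toy. Production systems can still show some variation even at temperature 0. The prompt, vocabulary and scores are made up for illustration.

```python
import math
import random

# Hypothetical next-token scores for the prompt "The capital of Australia is".
LOGITS = {"Canberra": 3.0, "Sydney": 2.2, "Melbourne": 1.5}


def softmax(logits, temperature):
    """Convert scores to probabilities; higher temperature flattens the distribution."""
    scaled = {tok: s / temperature for tok, s in logits.items()}
    m = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - m) for tok, v in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def next_token(logits, temperature, rng):
    if temperature == 0:
        # Greedy decoding: always pick the highest-scoring token.
        return max(logits, key=logits.get)
    probs = softmax(logits, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


rng = random.Random(0)
sampled = [next_token(LOGITS, 1.0, rng) for _ in range(200)]
greedy = [next_token(LOGITS, 0, rng) for _ in range(200)]
print(sorted(set(sampled)))  # several distinct answers to the same prompt
print(set(greedy))           # {'Canberra'}
```

At temperature 1.0 in this toy, the plausible-but-wrong ‘Sydney’ is drawn roughly a quarter of the time. The same input therefore does not reliably yield the same, correct output.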

Explainability will become a competitive advantage. AI labs and companies are currently talking up advances in the reasoning capabilities of their models. Highly advanced, human-like AI reasoning is a long way off, and it is perhaps unattainable with existing AI model architectures. Even so, improved reasoning capabilities should lead to more sophisticated explainability and interpretability. Chain of thought, for example, will continue to be advanced as a technique for solving complex problems: the model breaks them into a sequence of logical steps and works through them in order. Furthermore, advances in inference-time compute will complement these efforts. As AI models spend more time processing inputs and calculating which output (i.e., prediction) to generate, there will be an expectation of greater reliability, accuracy and reasoning ability. There will also be an expectation of better explainability and interpretability. After all, the model is taking longer to generate the output and following a methodology that more closely resembles human reasoning. Embedding this explainability into AI products and services will become a competitive advantage. It will also increasingly be seen as mission-critical for high-risk enterprise use cases, in domains like healthcare and finance.

3 practical tips to navigate the year

Don’t try to read everything. There is far too much going on in AI governance to keep up with it all. In 2025, your focus needs to be razor-sharp. Zoom in on what matters most for your business, sector, jurisdictions and customers. This newsletter and my AI Cheat Sheets are designed to help you cut through the noise.

Don’t reinvent the wheel and build everything from scratch. Many organisations are creating AI governance frameworks, toolkits, open source libraries, guardrails, policy templates, checklists and other valuable resources, which you can leverage for your AI governance work. There is no need to start from zero. Throughout 2025, I will be sharing some of the most useful openly available resources in this newsletter.

Choose your AI providers wisely, because ignorance isn’t an excuse. Foundation models and model developers face increasing scrutiny. Model cards, benchmarks, safety reports and other studies, including some from AI Safety Institutes, are publicly available. There is therefore no excuse for using or purchasing AI models that have not been designed and developed with safety as a top priority.
